Controversial Ideas, 5(2), 2; doi:10.63466/jci05020002

Article

Science Is the Thing. Why and How to Restore Balance Between U.S. Institutional Review Boards and Investigators

1 Radiology and Biomedical Imaging, Yale University, New Haven, CT 06520, USA

2 Psychology, Rutgers University, Livingston Campus, Piscataway, NJ 08854, USA; jussim@psych.rutgers.edu

3 Neurobiology, University of Chicago, Chicago, IL 60637, USA; pmason@uchicago.edu

4 American Enterprise Institute, Washington, DC 20036, USA; slsatel@gmail.com

*

Corresponding author: evan.morris@yale.edu

How to Cite: Morris, E.D.; Jussim, L.; Mason, P.; Satel, S. Science Is the Thing. Why and How to Restore Balance Between U.S. Institutional Review Boards and Investigators. Controversial Ideas 2025, 5(Special Issue on Censorship in the Sciences), 2; doi:10.63466/jci05020002.

Received: 16 March 2025 / Accepted: 18 September 2025 / Published: 27 October 2025

Abstract

In the United States, the Institutional Review Board (IRB) derives its power from the 1978 Belmont Report and from the (Revised) Common Rule, effective in 2019, which extends its authority across multiple federal agencies, including NIH. The IRB serves as the local oversight committee protecting human subjects in social science and biomedical research. But how much protection is enough? And at what cost? We review several historical and modern cases to illustrate the evolution of the IRB and its invasiveness. The cases correspond loosely to distinct historical eras that Moreno has termed "Weak Protectionism," "Moderate Protectionism," and "Strong Protectionism." We believe we have now descended into an era of "Hyper-Protectionism" in which the costs to science far outweigh the benefits to the protection of human subjects. In response, we propose a set of guiding principles, the "Mudd Code," aimed at restoring the balance between oversight and research efficiency and productivity.

Keywords:

Mudd Code; Belmont Report; respect for persons; IRB; bureaucratic overreach; overregulation; regulatory burden; human subjects research; self-experimentation

Introduction

In 1979, the Belmont Report laid out a set of guiding principles designed to protect human research participants in US-based experiments from undue or unjustifiable harm. Broadly stated, the principles were predicated on a balancing of risk and benefit. The Institutional Review Board (IRB) for federally supported research was then codified, 35 years ago, as the local agent overseeing adherence to the Belmont Report's principles. The standards used by IRBs to discharge their responsibilities have evolved – and expanded. It is the opinion of the authors that IRBs have expanded their control and scope beyond what was originally conceived – even given the egregious violations of ethical norms that drove the need for the Belmont Report in the first place. Today, a drive to eliminate all risk and to preclude all potential criticism of the institution seems to animate the IRB. These factors have led to bureaucratic overreach which, in turn, derails the mission of research institutions. Overreach has moved biomedical research on humans far from the letter and spirit of the Belmont principles and, in doing so, has frustrated researchers, alienated willing participants, and impeded scientific progress.

Here, we recount examples from both recent and more distant history – some of them personal experiences – to illustrate the evolution and encroachment of overreach into different areas and types of research. We believe that IRBs are unnecessarily obstructive, acting in areas where they do not belong and in ways that are dismissive of investigator needs and participant wishes. They can even be manipulated for sinister and ideological purposes. In brief, they need to get back to basics. After examining some possible explanations for the overgrowth of the IRB’s purview, we conclude by proposing a new set of ten general principles – the Mudd Code – to guide actions and counteract bureaucratic overreach that is now endemic in IRBs. The principles are grouped into three main categories: transparency, justification of risk evaluation, and limits of risk reduction. The code is not a refutation of the Belmont Report but rather intended to exist in harmony with it – even to strengthen it. Our code’s principles are designed to promote predictability, accountability, and consistency, while minimizing delays, investigator confusion, frustration, and make-work. We believe that if institutions adopt the Mudd Code, IRBs can better and more efficiently discharge their obligations to human subjects while restoring balance between minimizing risk to human subjects and fostering life-giving biomedical research in a timely manner.

Historical Background

IRBs exist to assess the risks assumed by human subjects in scientific experiments and to prevent subjects from being exposed to potential harms without their consent. This is a vital responsibility. The IRB owes its existence to the Common Rule (45 CFR 46, n.d.), first adopted in 1991 by multiple federal agencies (hence the term "Common") to assure the protection of human research subjects (Common Rule, n.d.). The Rule specifies that IRB committees include at least five members and that at least one member be unaffiliated with the institution. If vulnerable classes (e.g., prisoners, people with mental illness) are included in a study, then a special advocate for that class of subjects must be added to the IRB. Beyond those requirements, each institution has latitude in selecting the membership of its IRB.

The IRB reviews all human research protocols submitted for work that will be conducted at the university or research institution. Projects involving human subjects, even those confined to surveys, focus groups, and qualitative interviews are subject to IRB approval. The definition of human research can be found at the National Institutes of Health (NIH) website on Human Subjects (NIH, n.d.). The governing document for IRBs in the United States is the Belmont Report (1979), which introduced three governing principles: Respect for Persons (aka Autonomy), Beneficence, and Justice (Belmont Report, 1979).

The principle of autonomy, which places the individual at the center of decision-making, relies heavily on informed consent. Beneficence relates to the balancing of risk and reward. Justice is an aspirational concept that demands that all populations – however defined – who might serve as research subjects be eligible to benefit from the research. The implementation of these general principles is not explicitly enumerated for every scientific experiment; doing so would defeat the purpose and strength of agreed-upon general principles. Nonetheless, it is the difficult task of the IRB to review and approve or reject every protocol based on the three broad principles in the Belmont Report. The Belmont Report, in turn, is a synthesis of the ten items that make up the Nuremberg Code (see Appendix A), written in 1947 in the aftermath of the Holocaust and the medical atrocities committed by Nazi doctors. In the interpretation of Schupmann and Moreno (2020), however, there is a significant difference in orientation between the Nuremberg Code and the Belmont Report. Whereas the Nuremberg Code was initiated by investigators to guide their own self-regulation, the Belmont Report was written in response to a growing public perception that experimenters were not self-regulating. As such, the Belmont Report is more protectionist in its outlook. It introduces the concept of "vulnerable classes" and a dependence on outside review.

There are inevitable tensions between the needs and expectations of investigators, on one hand, and the IRB on the other. Investigators are eager to act on their ideas. They are usually required to have IRB approval in place before they can accept NIH funds – even for grants that have been provisionally awarded. After accepting funds, investigators come under pressure to spend them quickly and appropriately lest NIH ask for the balance to be returned at the end of the year. But each time a protocol is amended for a scientifically justified change, no matter how minor, the IRB must review and reapprove. In the interim, the proposed change in a protocol cannot be implemented; if the change is needed to continue the project, all new data collection must wait until approval is granted. The balance of power favors the IRB. Without its approval, investigators must sit on their hands.

Overinterpretation of federal rules compounds the problem. The regulations in 45 CFR 46 (HHS regulations for the protection of human subjects in research) require IRB review only for federally funded research and research by federal employees (45 CFR 46, n.d.). In the case of research that has no federal funding, the imposition of IRB review constitutes a choice by research institutions, not something that is mandated. In 2006, Shweder noted that the federal government gives institutions receiving federal funding the opportunity to opt out of applying federal regulations to non-federally funded research. At the time that Shweder was writing, at least 174 institutions had taken that option (Shweder, 2006).

Over time, protocols – whether federally funded or not – have grown in length and scope. Aspects of research planning not considered worthy of IRB review ten years ago are now subject to increasing scrutiny and sometimes lengthy review. Mission-creep means that reviews take longer and delve into areas that are beyond the scope of the IRB as originally enumerated in the Belmont Report. The latitude given to IRBs results in wide variation in the assessments of risk and potential harm. We believe that the balance of power between the IRB and the investigator at many institutions is out of kilter.

How Can We Restore the Equilibrium?

It is instructive to consider illustrative episodes of scientific achievement that predate the IRB, or that coexisted with the pre-modern IRB, and to compare them with recent episodes in which more expansive IRBs have scuttled plans for scientific investigation. (This select list is representative of our central themes and is not intended to be exhaustive.) The evolution of the IRB, its expanding reach, and its observable trajectory lead to our recommendations for restoring the balance between the need to protect subjects – as determined by the IRB – and the needs of investigators to conduct good and timely science. The whole scientific enterprise rests on scientists pursuing their interests with the ultimate aim of benefiting the public.

Historical Cases

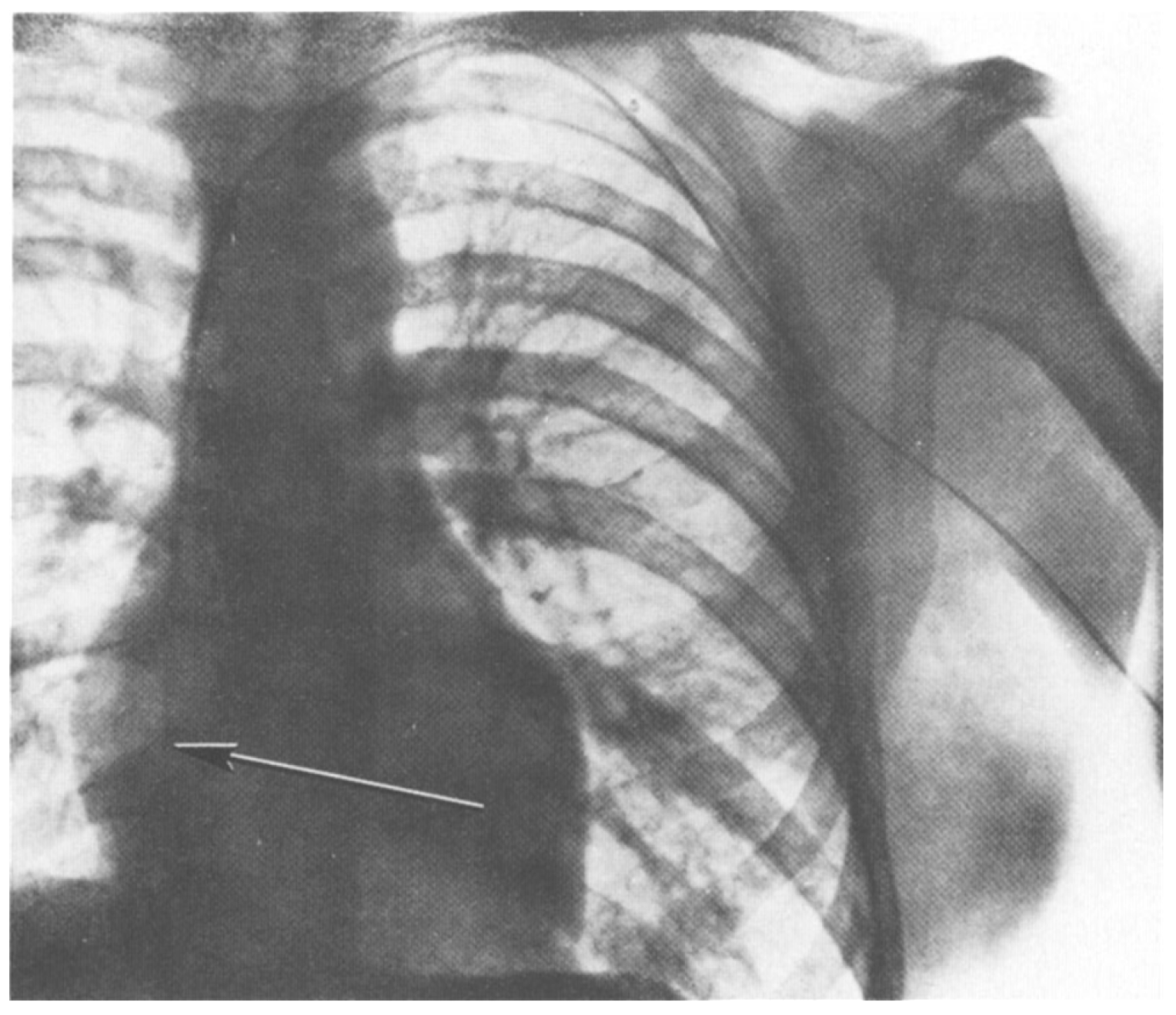

In 1929 at the Auguste-Viktoria Hospital in Eberswalde, Germany, Dr. Werner Forssmann fed a catheter through a vein in his arm all the way to his heart. He recorded this feat on x-ray (using an x-ray machine one floor above his own lab; see Figure 1). The x-ray shown in Figure 1 documented Forssmann's self-experiment and was published that year (Forssmann, 1929). This daring work, for which Forssmann was eventually awarded the Nobel Prize in Physiology or Medicine, ushered in the field of cardiac catheterization. The story of Forssmann's experiment is retold in a recent article on overregulation (Morris, 2023) and in greater detail in a book on self-experimentation (Altman, 1998).

Figure 1.

X-ray of W. Forssmann's torso after self-catheterization. Catheter shown on x-ray from original publication (Forssmann, 1929). From the original figure legend: "the catheter passes directly inward from the left arm, under the clavicle at the chest wall, and makes a downward bend at the place of junction with the jugular vein, lying near the margin of the great-vessel shadow and the shadow of the spine, and reaching as far as the right atrium. On another passage, the catheter did not reach any further than this." "I watched carefully for any other effects, or signs of irritation of the cardiac mechanism, but could not identify any. In our institution there is a considerable distance between the operating rooms and the x-ray unit. To go from one to the other I had to climb staircases on foot and return, while the probe was lying within my heart, but I was not aware of any unpleasantness. Passage and removal of the catheter were entirely painless, accompanied only by the above-mentioned sensations. Later on, I could find no sequelae to the procedure…" (Forssmann translated by Meyer, 1990).

What are we to make of Forssmann's actions? Reckless? Uninformed? Forssmann's boss had instructed him not to perform the self-experiment (Altman, 1998). So, we could characterize him as insubordinate. On the other hand, he was aware of work by Chauveau and Marey that measured intracardiac pressure by placing a catheter in the heart (Forssmann-Fack, 1997). Forssmann had already done experiments on a cadaver to confirm that his planned self-experiment was reasonable and achievable (see translation of Forssmann paper in Meyer, 1990). He was also familiar with the medical literature and understood the risks he was assuming. Further, Forssmann had a specific scientific and medical goal in mind: to be able to deliver drugs to the heart in the case of a heart attack in a less invasive manner than via intracardiac injection.

There is little doubt that a modern IRB would have objected to Forssmann's experiment. But why? After all, Forssmann, the human subject, was as informed as any experimental subject could have been. So, the first principle of the Belmont Report, "Respect for Persons," would dictate that Forssmann's experiment be allowed. The experiment had bona fide scientific value and was intended to yield useful results for society; it was based on previous work (Forssmann's and others') on non-human animals and cadavers; and it was being conducted by a scientifically qualified person. In all respects, Forssmann's experiment adhered to the principles of the Nuremberg Code (#1 informed consent; #3 yield fruitful results; #4 based on animal experimentation; #8 by qualified persons). It is especially interesting to note item #5 of the Nuremberg Code in its entirety: "No experiment should be conducted where there is an a priori reason to believe that death or disabling injury will occur; except, perhaps, in those experiments where the experimental physicians also serve as subjects." The principle in the second clause of item #5 is a provision for self-experimentation. But self-experimentation, per se, did not make it into the Belmont Report. The absence of an explicit dispensation for self-experimentation can be seen as consistent with the distinction of Schupmann and Moreno (2020) between the orientation of the Nuremberg Code (favoring self-regulation by investigators) and the Belmont Report (favoring external regulation).

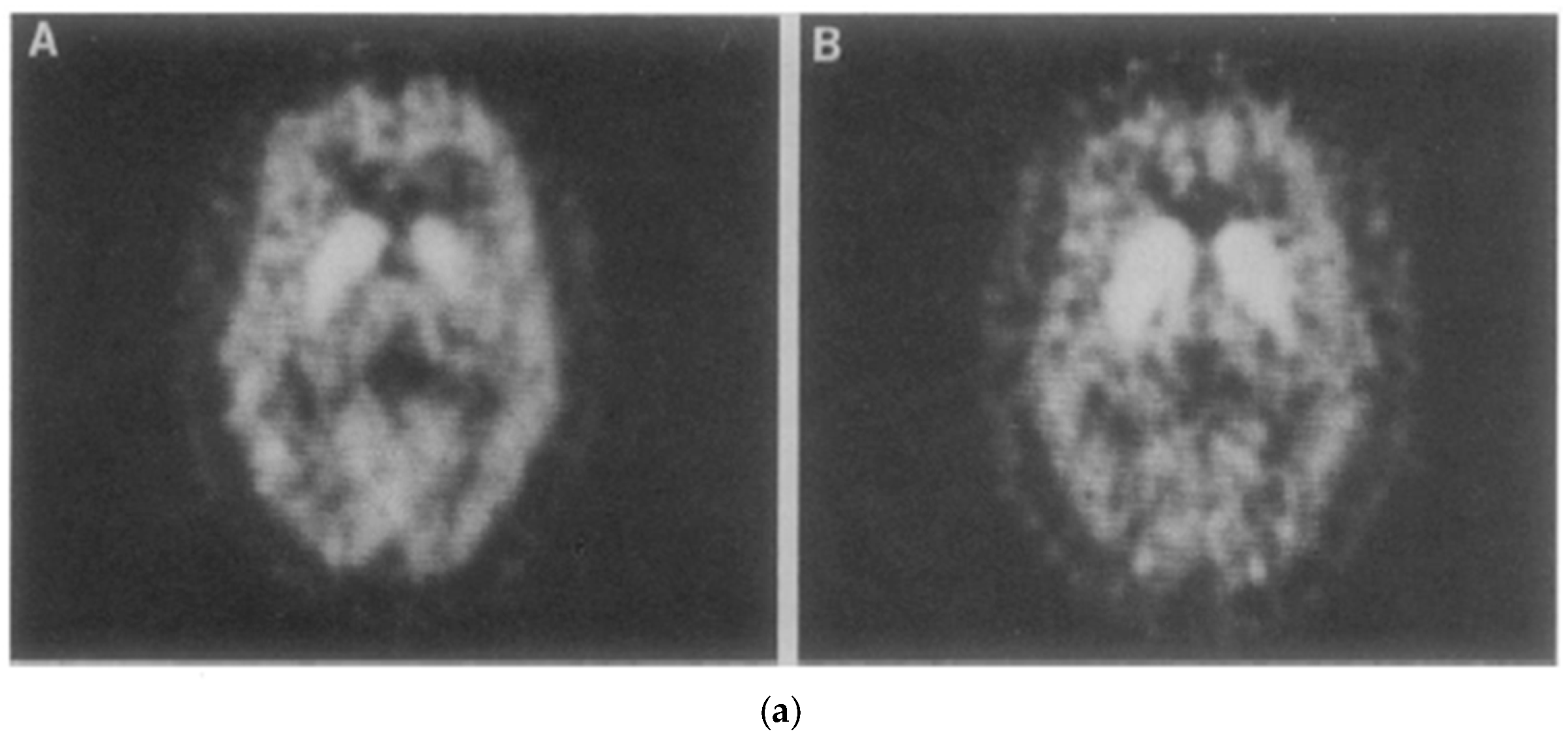

In 1983, Drs. Henry Wagner and Mike Kuhar and colleagues at Johns Hopkins University in Baltimore performed the first-ever brain scan of functioning dopamine receptors in a living primate brain. The imaging modality was positron emission tomography (PET), a form of nuclear medicine that requires the injection of a molecule labeled with a positron (β+) emitter, that is, a radioactive isotope. The primate was a baboon. At the last minute, as the authors imagine it, the investigators decided to image one of themselves as well. So, Wagner jumped up on the scanner, a colleague injected him with the same dopamine-receptor-binding radioactive tracer used in the baboon, and the rest is history (see Figure 2). The legend of the original figure reads in part, "An awake 56-year-old Caucasian male was positioned in the PET scanner". The text says unambiguously, "after the baboon … [the] experiment was performed on one of us." There is no mention in the published article of a procedure for recruiting human subjects, an approved IRB protocol, or a consent form of any kind. That is very likely because none of these existed, nor did the investigators think they needed them (Wagner et al., 1983). The resulting paper in Science is a landmark in the field of PET brain imaging. The work ushered in the use of PET for imaging receptors and enzymes in vivo, with implications for understanding basic neurochemistry and neuropsychiatric disease, as well as for drug development. So, as with the Forssmann experiment, it is fair to say that the human subject was eminently informed of the risks, the experiment was based on prior animal experiments, and the work was likely to (and did in fact) yield valuable results. To have disallowed it would have run counter to "Respect for Persons" and to multiple tenets of the Nuremberg Code (see Appendix A).

Figure 2.

(a) PET scans of dopamine D2 receptors in Henry Wagner's brain (Wagner et al., 1983). (b) A Baltimore Sun article (Scientists Observe, 1983) showing M. Kuhar (left) and H. Wagner (right) looking at a PET scan of the latter's brain.

Modern Cases

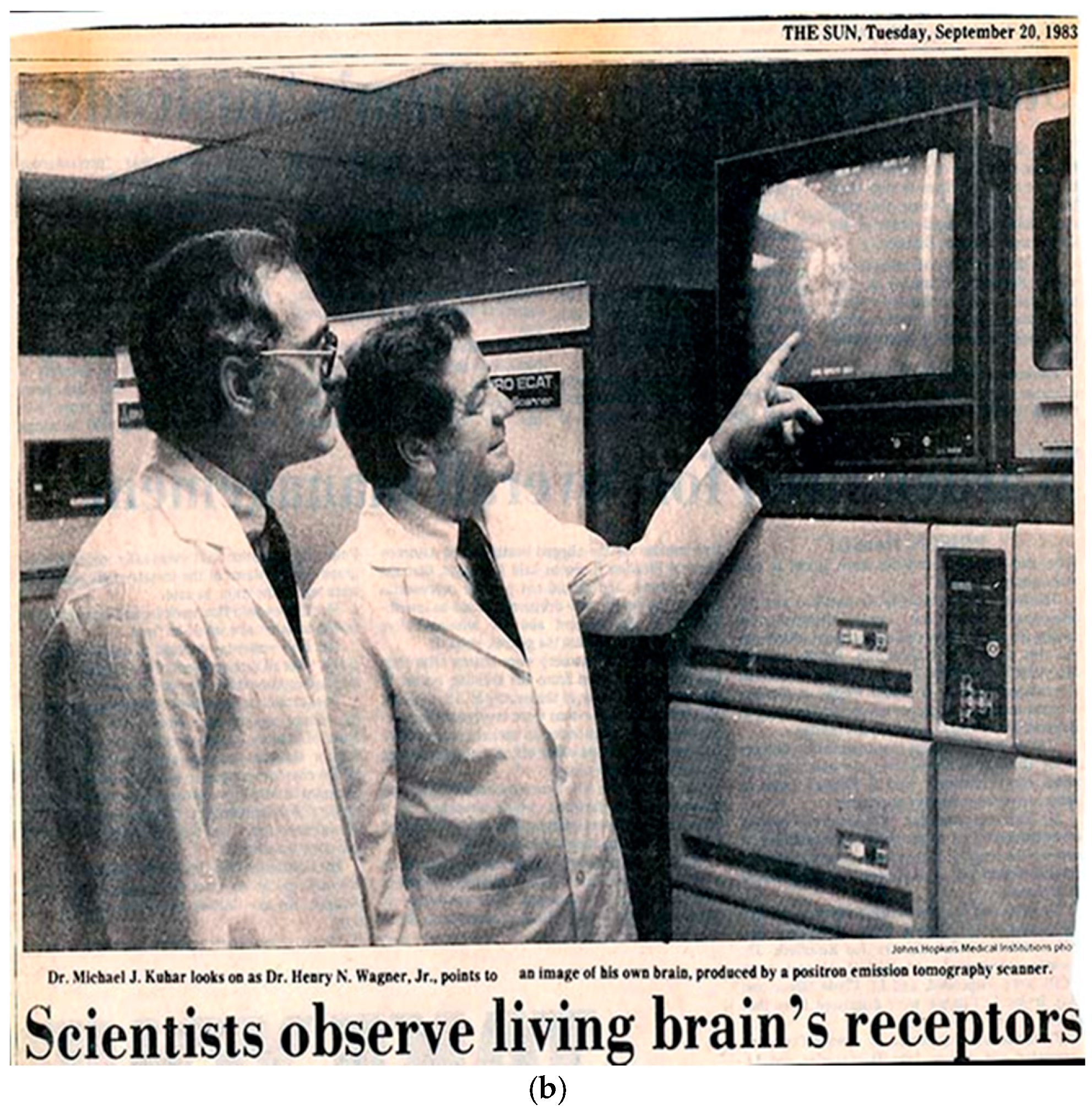

In 2006 at the Indiana University Hospital in Indianapolis, in the age of the modern IRB, one of this paper's authors (EDM) hoped to have his Henry Wagner moment. He submitted an experimental protocol to the Indiana University IRB seeking approval to participate as a healthy control subject in a newly funded project studying the responses of dopamine and dopamine receptors to expected and unexpected administrations of intravenous (iv) alcohol (see Figure 3 for a schematic showing delivery of iv alcohol, odors of alcohol, and matched visual cues to a subject in the PET scanner). Visual and olfactory cues are used to manipulate the participant's expectation of alcohol. To add educational value to the experiment, the author explained that he intended to perform it in the presence of the students in his Medical Imaging class. All the necessary documentation was provided before the start of the spring semester class. To the author's dismay, IRB approval was not issued until after the end of the semester, extinguishing any possibility of immediate educational value. To the author's further dismay, one of the questions that the IRB review committee posed (and which delayed approval) was, "How will the investigator consent himself?"

Figure 3.

Schematic of the experiment that the author designed with colleagues at Indiana University Medical School to investigate the dopaminergic response of the human brain to expected and unexpected alcohol. Alcohol is delivered intravenously; olfactory and visual cues are manipulated to alter expectations. It had been the hope of the author to secure IRB approval in time for the students in his Medical Imaging class to observe a trial run of the new experiment in which the investigator would serve as a volunteer subject.

As with Forssmann and Wagner before him, it is reasonable to assume that the author understood the risks of an experiment that he himself had designed and successfully submitted for NIH funding. Regrettably, but not uncommonly, this episode points to additional issues that arise with IRB review. First, the IRB committee too often confuses procedure with principle. Informed consent is not embodied in the act of informing a subject; rather, it depends on the subject's state of being informed (and then choosing to consent). Second, delay can be costly. Although the work by the author and his colleagues on this project eventually led to what they believe to be the first demonstration of dopamine changes in the living human brain resulting from "negative reward prediction error" (Yoder et al., 2009), the students played no part and cannot even say they were in the room when it happened.

Mundane Cases

Some of the most common examples of IRB overreach can be the most frustrating. Scientists engaging in research with human subjects regularly receive notices from their IRB that the approval for their work has expired or will be expiring soon, and critically, unless the IRB proposal is renewed, all work on the project must cease (Shweder, 2006). From where does the authority of this “cease and desist” type of order come? If the work is not federally funded, it does not come from the government. As Shweder (2006, p. 512) stated:

[T]he federal government has no authority to tell researchers who do not apply for federal grants to stop talking to people, associating with people, recording the things people tell them, thinking about the things people tell them, or writing it all up and publishing it. That would be a blatant transgression of various protected First Amendment liberties (of association, speech, and press).

One of us (LJ) has had many interactions with the Rutgers IRB in seeking approval for social science research that is not federally funded. In these circumstances, there are no competing authorities (like the local radiation safety committee that often oversees PET imaging) with reason to limit overall enrollment. Yet the Rutgers IRB regularly instructs researchers to stop data collection lest they enroll more participants than originally planned in the protocol. We believe this demand for stoppage of social science work is an overinterpretation of the IRB's mandate.

At Yale in 2020, one of us (EDM) submitted to the IRB an amendment to an existing experimental protocol. The amendment was simple: it sought approval for the use of a new PET scanner that had recently replaced an old one at the Yale PET Center. In response to this straightforward request, an IRB committee member took the added precaution of advising the investigator that if he also intended to replace any magnetic resonance imaging (MRI) scanners, he would first have to secure approval from a committee at the MR Center. Yet a new PET scanner and a new MRI scanner are wholly unrelated. The reviewer's comment is akin to telling someone in line for a new driver's license at the department of motor vehicles (DMV) that, should they also plan to get their marriage annulled, they would have to go to a separate government agency. This might sound like a triviality. However, it has real and negative consequences. Each comment by an IRB member on a new protocol or an amendment requires a formal response from the investigator and a subsequent IRB evaluation of that response. These steps add up to longer and longer delays.

Mission Creep

Although we favor a system of risk assessment that gives greater deference to investigators, we endorse the principles of the Belmont Report, as well as the IRB, which is the mechanism by which the mission of the Belmont Report is executed in the interest of the public. But the preceding examples of IRBs-gone-wild reflect an overall IRB mission creep. Mission creep – even if the result of good intentions – negatively affects the progress of research and science. First, dealing with an IRB whose mission keeps expanding can be unpleasant and overly litigious. If one never knows what the IRB will question or reject, or on what reasoning, or where its jurisdiction and authority end, then investigators must act defensively and hyper-legalistically. They must try to anticipate every possible way the IRB might take exception – even wacky ways. This is unnecessarily time-consuming and futile. Scientists should not have to be lawyers to prove they are performing ethically. Second, an ever-expanding jurisdiction makes dealing with the IRB deflating for scientists who are eager to do their work and test their hypotheses. Usually, this means acting within a finite window of opportunity. If the IRB at the investigator's institution is too slow or obstructionist, the window may close. The time frame for an experiment is often restricted because of funding, and a particular human subject or group of subjects may be available only for a finite period. Inordinate and illogical delays also sap an investigator's enthusiasm, even to the point of giving up. The ultimate loser is the public, deprived of good science.

One of us (PM) recently recounted two cases of IRB delay and rigidity that reinforce the cases cited above (Mason, 2024). The first case involved a highly unusual event. Subjects were students who had been struck by lightning on a hiking trip. The scientific question was whether lightning strikes permanently alter nerve conduction. The window of opportunity was short because the students were only going to be on campus for a limited period. Despite vigorous attempts, IRB approval for the protocol never materialized. “The project and our enthusiasm for it lay dead,” Mason wrote, “slain by the Byzantine process of IRB approval” (Mason, 2024). Delays can be costly. The cost is paid in closed windows of opportunity and in extinguished investigator enthusiasm.

IRB Violations of the Belmont Report (Dis-Respect for Persons)

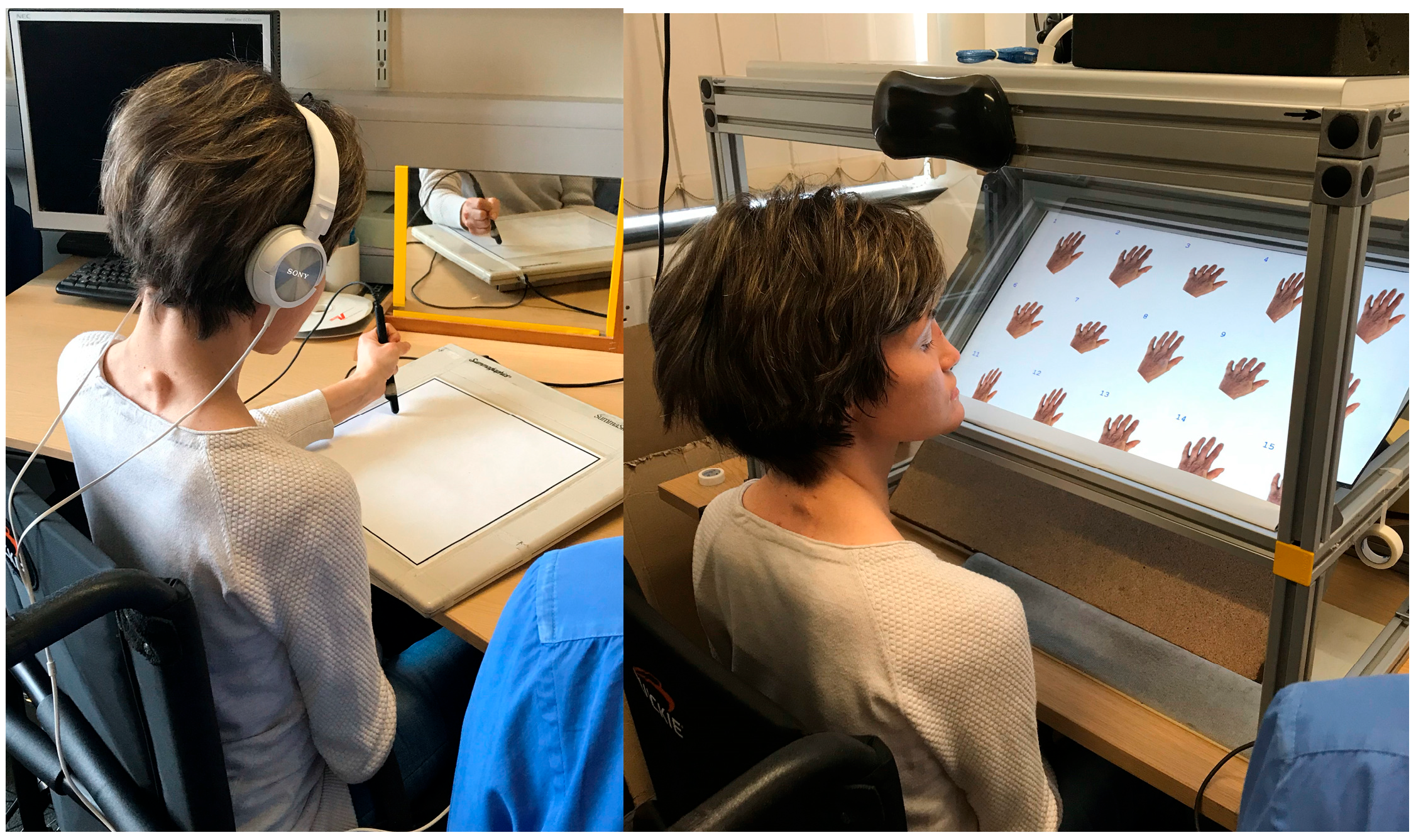

In describing a frank violation of Respect for Persons, author PM recounts her experience with Kim, a research participant who lacks the ability to sense touch or pain. Kim is an enthusiastic participant in medical research on her condition (Figure 4). She is eager to bring light to her condition and to help put a human face on it. In scientific publications about her, she prefers to be referred to as Kim rather than by the more traditional designation KS. Unfortunately, one of the IRBs overseeing PM's research was unmoved by Kim's informed and articulate argument for lifting her anonymity (Kim is a law school graduate and writes knowledgeably and with obvious comprehension). The IRB insisted that Kim be referred to as AA. This IRB action flies directly in the face of "Respect for Persons." Kim is the person in question. She seeks to contribute to medical science as a participant. She seeks to have her name associated with her condition. She is fully informed. How does refusing her request and insisting that she be labeled "AA" in publications demonstrate respect? It does the opposite.

Figure 4.

Kim is shown participating in somatomotor experiments to understand how her inability to feel touch or sense movement affects her ability to write in a mirror and her perception of her hands. Results from these studies can be found in Miall et al. (2021a, 2021b). Photos (care of P Mason) used with Kim’s permission.

Respect or disrespect for persons has implications that go far beyond one volunteer's desire to bring light to her condition. Consider challenge trials: an investigator administers a vaccine under development to volunteers and then infects them with a pathogen, usually a virus. Researchers select a small number of individuals with the explicit understanding, based on laboratory findings, that the risk of developing serious illness is low. The odds of permanent injury or death are lower still. A second important premise of such studies is that their results could not easily be obtained from other investigations. An obvious virtue is that researchers need not wait for the population to be exposed to a pathogen naturally. Challenge trials can hasten vaccine development and save thousands of lives in the midst of an outbreak.

Challenge trial participants could be considered modern-day versions of self-experimenters. While volunteers are not investigators per se (à la Forssmann or Wagner), they knowingly expose themselves to considerable risk, and an urgency drives their decision. Challenge studies have proved safe in practice. Between 1980 and 2021, over 15,000 people participated in human challenge studies for over two dozen different diseases, including Zika, cholera, malaria, and COVID-19 – with zero recorded deaths and only a handful of cases requiring emergency medical care (Adams-Phipps et al., 2023).

The need for a rapid response to COVID-19 brought the matter of challenge studies to the fore in 2020. But how to justify such studies? In support, the 1DaySooner organization (including many Nobelists) published an open letter on July 15, 2020 (US Open Letter, 2020). One of us (SS) was an initial signatory. It is instructive to note the letter’s emphasis on autonomy:

The autonomy of the volunteers is of paramount concern. This means that the informed consent process must be robust (e.g. no children, no prisoners, multiple tests of comprehension). It also means that the wish of informed volunteers to participate in the trial ought to be given substantial weight.

Sinister Cases

Many of the IRB actions that delay studies and dampen investigators' enthusiasm might generously be attributed to good intentions, albeit clumsily applied. But there are also instances in which IRBs have been commandeered by bad actors for malicious purposes. Author LJ and collaborator Nathan Honeycutt have written about their Kafkaesque ordeal: six months and three investigations of their social science work by the aforementioned Rutgers IRB and an IRB-appointed outside investigator (Jussim & Honeycutt, 2024). LJ and Honeycutt's work explores the degree to which academics endorse extreme political and cultural views. To succeed, these investigators must ask questions that may seem extreme to some. The investigators suspected that they were investigated for asking questions that offended one of their participants because of his or her politics. What they knew for sure was that someone complained to the Rutgers IRB about being subjected to "biased" questions, and this sparked an investigation requiring hours of meetings and extensive documentation to prove that the investigators had adhered to the approved protocol.

Jessica A. Hehman and Catherine Salmon of the University of Redlands (both former IRB chairs) have similarly written about cases of IRB misdeeds explicitly intended to thwart research, whether out of an overzealous desire to protect subjects, for ideological reasons, or to protect the university's reputation (see Hehman & Salmon, 2024). In many such cases, the investigations initiated against faculty members seemed bizarre on their face but were nevertheless allowed to proceed. So one must ask, as LJ and Honeycutt did: why is there no means of dismissing unworthy investigations before they do damage?

Where Does the Imbalance Come From?

What are some of the underlying causes of IRB overreach and how can they be curtailed? Hehman and Salmon offer possible explanations for some of it:

- Poor training of IRB members as to what constitutes harm to human subjects.

- IRB members who are unwilling to set aside their own political likes and dislikes and allow the work of researchers they suspect disagree with them to proceed.

- Lack of understanding by IRB members of what is standard practice in a given research field (e.g., social science), such as questionnaires with provocative or intrusive questions.

- Jealous colleagues using the IRB to impede others’ work.

- Universities seeking to protect their own brand rather than academic freedom.

How much of the root cause is a failure of IRB oversight and how much is “performative ethics” is hard to know. Even a quarter century ago, expert observers expressed concern over the rise of “strong protectionism” in human subject research, that is, the empowerment of review committees (following externally imposed mandates), rather than virtuous scientists and researchers, as the primary guarantors of subject safety. Moreno (2001) termed the postwar period (1947 to the early 1980s) the era of Weak Protectionism, an era that granted wide latitude to experimenters. He called the period from the early 1980s to the end of the 20th century the era of Moderate Protectionism.

Under Moderate Protectionism, responsibility for the welfare of subjects fell equally to the experimenters and to an external oversight body. But in 2001, Moreno stated that the era of Strong Protectionism had arrived: the primary responsibility for the protection of subjects had passed from the investigator to the external committee. He worried that by outsourcing this primary responsibility to external committees, scientists themselves would feel less bound to police their own actions (Moreno, 2001). What was perhaps not anticipated in 2001 were two confluent trends that fuel the current environment (in effect since around 2020), which we dub the era of Hyper-Protectionism. These two trends underlie the current power imbalance between IRBs and investigators.

The first trend is toward an expanding definition of “harm.” After conducting extensive interviews with IRB members, Klitzman (2015) observed that committee members ignore the regulatory instruction that IRBs should not include long-term social harms in their calculations of risk. The relevant sentence in the Revised Common Rule (proposed in 2011; published in 2017; in effect as of January 2019) reads:

The IRB should not consider the possible long-range effects of applying knowledge gained in the research (for example the possible effects of the research on public policy). (Federal Register, 2011, 2017).

Klitzman cites Fleischman et al. (2011) as claiming that, despite the regulations, IRBs do consider social risks and then delay or even block the research in question. This expanding definition of harm, or increasing willingness to find it, may be explained by a phenomenon that Levari et al. (2018) termed “prevalence-induced concept change in human judgment.” As part of their work, Levari et al. convened mock IRBs and supplied them with collections of faux proposals to review. As the prevalence of unethical proposals in the collection was reduced, committee members persisted in flagging the same percentage of proposals as unethical. Reviewers appeared to expand their own definitions of what counted as unethical (Levari et al., 2018).

The second trend is the marginalization of faculty judgment in university decision-making. Why must faculty at Yale (or anywhere) get permission before they can spend money from their own grants on grant-related purchases and expenses that they themselves budgeted? Perhaps to mollify ever-growing bureaucracies. At Yale, where a report on the subject exists, we know that the number of administrators and the layers of administration have swelled since 2003, while the size of the faculty has stagnated (Size and Growth, 2022). As more decisions must be approved by more administrators, scientific research is inevitably slowed.

“Balance” implies the integration of opposing considerations. At present, few forces limit the IRB other than a very vocal investigator. Just as investigators must balance risks against potential rewards in the conduct of their research (“Beneficence”), so IRBs must be persuaded to weigh the marginal value of each additional constraint placed on investigators against the real costs of delayed research, including the possibility that excessive delays lead to abandonment of the work.

Proposal to Reduce IRB Overreach

What is lacking from IRB review as currently configured is a set of principles that protect the considerations favoring allowing research to proceed, while keeping them in balance with the protection of human subjects.

Below, we offer ten principles, and a recommendation to amend the prescribed composition of IRBs, which could help counter IRB overreach and make less likely both the misuse and the demoralizing abuse of the IRB machinery. The intent of these principles is that they be general enough to apply broadly across scientific fields and institutions. We envision the principles serving as a counterweight to the growing inclination of IRBs to overinterpret their mandate as one of total elimination of actual harm or perceived harm without regard to its effect on the proposed science.

As an homage to the Belmont Report, named for the Belmont Conference Center where it was conceived, we refer to the following ten principles as the Mudd Code, named for the Seeley G. Mudd auditorium at USC where they were first enunciated. The ten principles are organized under three overarching ideas: Transparency (of IRB procedures), Justification of Risk Evaluation (which must be faithful to the Belmont Report and the Nuremberg Code), and Limits of Risk Reduction (to preserve and prioritize the science).

The Mudd Code

TRANSPARENCY

1. All IRB deliberations should be transparent. Deliberations should be recorded and open to the public for viewing, either in real time or shortly after they take place.

2. All IRB reviews of protocols must be completed rapidly. Three weeks would be in line with the time that journals typically allow for manuscript review.

3. All IRB reviewers must be identified by name. All comments and criticisms of a protocol must be attributed to, and signed by, a reviewer.

4. Investigators shall provide feedback on the performance of the IRB and its reviewers. Summary statistics and comments on all performance evaluations of the IRB must be made available to the faculty and the public yearly. Example statistics are:

   - Average (and range of) time from initial submission of a protocol to final approval.

   - Number of submissions and number of approvals within a year.

   - Average number of revisions required per protocol.

JUSTIFICATION OF RISK EVALUATION

5. All IRB requirements for submitting a protocol must be justified solely on the basis of Autonomy, Beneficence, or Justice, and must be labeled as such. Any IRB requirement that cannot be justified on at least one of these bases must be dropped. All IRB requests to modify protocols and related documents (inclusion criteria, advertisements, questionnaires, etc.) must be similarly justified solely on the basis of Autonomy, Beneficence, or Justice and must be so categorized.

6. Autonomy is paramount. A subject’s informed preferences should be heeded whenever possible. Anything less than maximal deference to the subject is a violation of Respect for Persons.

LIMITS OF RISK REDUCTION

7. Scientific and medical discovery is the ultimate goal and the reason for the existence of the IRB. Total elimination of risk is not the goal. Risk is to be limited for the purpose of balancing it against the institution’s acknowledged role in facilitating and promoting science and the research participant’s desire to help further science.

8. Practices not exceeding a (predefined) minimal-risk threshold are outside the IRB’s mandate. Such practices are entitled to expedited review. Minimal-risk thresholds should be defined by institutions and promulgated widely.

   We recommend the following standard: If the risk to participants is no greater than the risk they incur in their daily lives (e.g., from interacting with other people socially, commercially, recreationally, or politically), then risk is effectively nonexistent. Once minimal-risk standards are established, they are to be honored and not treated as a matter of personal preference by IRB members or reviewers.

9. Discipline-specific experts should be consulted whenever the IRB lacks sufficient domain knowledge of the discipline to determine minimal-risk standards. Long-accepted non-invasive, minimal-risk practices are known to experts in a discipline. Questionnaires in social science research are one example; non-invasive procedures such as those involved in psychophysical testing also fall within this category.

10. The re-evaluation of peer-reviewed science is not the purview of the IRB. The IRB must restrict its purview to identifying and mitigating the major and significant risks to human subjects, given already-approved science. If the scientific content of the proposal has already been reviewed by one or more bodies of appropriate scientific peers (e.g., grant review committees or journal article reviewers), then there is no basis for relitigating the science.

In addition to the above ten principles, we recommend that the composition of IRBs be modified. Just as IRBs must include special advocates for vulnerable classes of subjects (e.g., a prisoner advocate must be part of the IRB if prisoners are participants in a study), a science advocate should be present at all committee meetings to argue for the value of the scientific research being considered. This member would represent the benefits of the results of scientific research and thus help IRBs adhere to principle 7 of the Mudd Code: scientific and medical discovery is the ultimate goal. Risk is to be balanced against the potential benefit of the experiment. A balance is best struck if advocates for both concerns are in the room.
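The example statistics in principle 4 are simple enough that an IRB office could compute them directly from its protocol log. The following is a minimal Python sketch under stated assumptions. The `ProtocolRecord` fields and the `yearly_summary` function are hypothetical names, not part of any existing IRB system. "Revisions" is assumed to count the revision rounds the IRB requested for each submitted protocol. As an aid to principle 2, the sketch also counts approvals completed within a three-week (21-day) target.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean
from typing import List, Optional


@dataclass
class ProtocolRecord:
    """Hypothetical log entry for one protocol submitted to an IRB."""
    submitted: date            # date of initial submission
    approved: Optional[date]   # date of final approval; None if still pending
    revisions: int             # revision rounds the IRB requested


def yearly_summary(records: List[ProtocolRecord], target_days: int = 21) -> dict:
    """Compute the example statistics suggested in principle 4 (a sketch)."""
    approved = [r for r in records if r.approved is not None]
    # Days from initial submission to final approval, approved protocols only.
    days = [(r.approved - r.submitted).days for r in approved]
    return {
        "submissions": len(records),
        "approvals": len(approved),
        "mean_days_to_approval": mean(days) if days else None,
        "range_days_to_approval": (min(days), max(days)) if days else None,
        # Number of approvals that met the three-week target of principle 2.
        "approved_within_target": sum(d <= target_days for d in days),
        "mean_revisions": mean(r.revisions for r in records) if records else None,
    }


if __name__ == "__main__":
    # Hypothetical log of three protocols; the last one is still pending.
    log = [
        ProtocolRecord(date(2024, 1, 1), date(2024, 1, 22), 1),
        ProtocolRecord(date(2024, 2, 1), date(2024, 3, 2), 3),
        ProtocolRecord(date(2024, 3, 1), None, 2),
    ]
    print(yearly_summary(log))
```

In this sketch, pending protocols are excluded from the time-to-approval figures but still count toward submissions and average revisions. A real report might list pending protocols separately.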

Conclusion

It is our hope that the Mudd Code will be adopted by IRBs and will guide their members and scientists toward amicable, efficient, and timely review of protocols for human subject experimentation. We hope that such a code, based in respect for persons, transparency, and accountability of decisions and practices, and recognizing the limits of the power and mission of IRBs, will restore balance between the scientist who wants to test an important hypothesis and the (usually) earnest desire of the IRB to monitor and minimize risk to human subjects. The transparency that we advocate can help to prevent ideological abuses of the system.

The advance of medical and social science research depends on human experimentation. The research complex, including investigators and administrators, must never break trust with human volunteers. If that trust is damaged, research could collapse. Trust is built on respect for the volunteer subjects: respect for their safety and, equally, for their autonomous wishes. But respect for subjects is also imperiled when they give their time to research that fails to go forward.

Author Contributions

Conceptualization, E.D.M. All authors were involved in writing the original draft, editing, and reviewing all subsequent drafts. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

We are grateful to Professors Nicholas Christakis and Kate Stith of Yale for their recommendations on supporting documents for the text. We are grateful to Professor Abigail Thompson of UC Davis for proofreading assistance. We are especially grateful to a student in Professor Morris’s Professional Ethics class at Yale for her suggestion to add a science advocate to the IRB.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

The Nuremberg Code¹

“Permissible Medical Experiments.” Trials of War Criminals before the Nuremberg Military Tribunals under Control Council Law No. 10. Nuremberg, October 1946–April 1949. Washington: U.S. Government Printing Office (n.d.), vol. 2, pp. 181–182.

- The voluntary consent of the human subject is absolutely essential. This means that the person involved should have legal capacity to give consent; should be situated as to be able to exercise free power of choice, without the intervention of any element of force, fraud, deceit, duress, over-reaching, or other ulterior form of constraint or coercion, and should have sufficient knowledge and comprehension of the elements of the subject matter involved as to enable him to make an understanding and enlightened decision. This latter element requires that before the acceptance of an affirmative decision by the experimental subject there should be made known to him the nature, duration, and purpose of the experiment; the method and means by which it is to be conducted; all inconveniences and hazards reasonably to be expected; and the effects upon his health or person which may possibly come from his participation in the experiment. The duty and responsibility for ascertaining the quality of the consent rests upon each individual who initiates, directs or engages in the experiment. It is a personal duty and responsibility which may not be delegated to another with impunity.

- The experiment should be such as to yield fruitful results for the good of society, unprocurable by other methods or means of study, and not random and unnecessary in nature.

- The experiment should be so designed and based on the results of animal experimentation and a knowledge of the natural history of the disease or other problem under study that the anticipated results will justify the performance of the experiment.

- The experiment should be so conducted as to avoid all unnecessary physical and mental suffering and injury.

- No experiment should be conducted where there is an a priori reason to believe that death or disabling injury will occur; except, perhaps, in those experiments where the experimental physicians also serve as subjects.

- The degree of risk to be taken should never exceed that determined by the humanitarian importance of the problem to be solved by the experiment.

- Proper preparations should be made and adequate facilities provided to protect the experimental subject against even remote possibilities of injury, disability, or death.

- The experiment should be conducted only by scientifically qualified persons. The highest degree of skill and care should be required through all stages of the experiment of those who conduct or engage in the experiment.

- During the course of the experiment the human subject should be at liberty to bring the experiment to an end if he has reached the physical or mental state where continuation of the experiment seems to him to be impossible.

- During the course of the experiment the scientist in charge must be prepared to terminate the experiment at any stage, if he has probable cause to believe, in the exercise of the good faith, superior skill and careful judgement required of him that a continuation of the experiment is likely to result in injury, disability, or death to the experimental subject.

¹ Downloaded from link to the article.

References

- 45 CFR 46. U.S. Department of Health and Human Services. n.d. Available online: link to the article (accessed 17 September 2025).

- Adams-Phipps, J.; Toomey, D.; Więcek, W.; Schmit, V.; Wilkinson, J.; Scholl, K.; Jamrozik, E.; Osowicki, J.; Roestenberg, M.; Manheim, D. A systematic review of human challenge trials, designs, and safety. Clinical Infectious Diseases 2023, 76(4), 609–619. [Google Scholar] [CrossRef] [PubMed]

- Altman, L. K. Who goes first? The story of self-experimentation in medicine; University of California Press, 1998. [Google Scholar]

- Belmont Report. Ethical Principles and Guidelines for the Protection of Human Subjects of Research; U.S. Department of Health and Human Services, 18 April 1979. Available online: link to the article (accessed 17 September 2025).

- Common Rule. U.S. Department of Health and Human Services. n.d. Available online: link to the article (accessed 17 September 2025).

- Federal Register. Human subjects research protections: Enhancing protections for research subjects and reducing burden, delay, and ambiguity for investigators. Federal Register 2011, 76(143). [Google Scholar]

- Federal Register. Federal policy for the protection of human subjects. Federal Register 2017, 82(12). [Google Scholar]

- Fleischman, A.; Levine, C.; Eckenwiler, L.; Grady, C.; Hammerschmidt, D. E.; Sugarman, J. Dealing with the long-term social implications of research. American Journal of Bioethics 2011, 11(5), 5–9. [Google Scholar] [CrossRef]

- Forssmann, W. Die Sondierung des rechten Herzens [Probing of the right heart]. Klinische Wochenschrift 1929, 8, 2085–2087, [addendum: 8, 2287]. (Translation of title by Meyer, 1990.). [Google Scholar] [CrossRef]

- Forssmann-Fack, R. Werner Forssmann: A pioneer of cardiology. American Journal of Cardiology 1997, 79(5), 651–660. [Google Scholar] [CrossRef] [PubMed]

- Hehman, J.; Salmon, C. How institutional review boards can be (and are) weaponized against academic freedom; Unsafe Science, 30 June 2024. [Google Scholar]

- Jussim, L.; Honeycutt, N. Weaponizing the IRB 2.0; Unsafe Science, 14 July 2024. [Google Scholar]

- Klitzman, R. The ethics police? The struggle to make human research safe; Oxford University Press, 2015. [Google Scholar]

- Levari, D. E.; Gilbert, D. T.; Wilson, T. D.; Sievers, B.; Amodio, D. M.; Wheatley, T. Prevalence-induced concept change in human judgment. Science 2018, 360(6396), 1465–1467. [Google Scholar] [CrossRef] [PubMed]

- Mason, P. The IRB protection racket. Inquisitive. 20 November 2024. Available online: link to the article (accessed 17 September 2025).

- Meyer, J. A. Werner Forssmann and catheterization of the Heart. Annals of Thoracic Surgery 1990, 49(3), 497–499. [Google Scholar] [CrossRef] [PubMed]

- Miall, R. C.; Afanasyeva, D.; Cole, J. D.; Mason, P. Perception of body shape and size without touch or proprioception: Evidence from individuals with congenital and acquired neuropathy. Experimental Brain Research 2021a, 239(4), 1203–1221. [Google Scholar] [CrossRef] [PubMed]

- Miall, R. C.; Afanasyeva, D.; Cole, J. D.; Mason, P. The role of somatosensation in automatic visuo-motor control: A comparison of congenital and acquired sensory loss. Experimental Brain Research 2021b, 239(7), 2043–2061. [Google Scholar] [CrossRef] [PubMed]

- Moreno, J. D. Goodbye to all that: The end of moderate protectionism in human subjects research. Hastings Center Report 2001, 31(3), 9–17. [Google Scholar] [CrossRef] [PubMed]

- Morris, E. Overregulation in science; Quillette, 2023. [Google Scholar]

- NIH. Definition of human subjects research; n.d. Available online: link to the article (accessed 17 September 2025).

- Schupmann, W.; Moreno, J. D. Belmont in context. Perspectives in Biology and Medicine 2020, 63(2), 220–239. [Google Scholar] [CrossRef] [PubMed]

- Scientists observe living brain’s receptors. Baltimore Sun, 20 September 1983. [Google Scholar]

- Shweder, R. A. Protecting human subjects and preserving academic freedom: Prospects at the University of Chicago. American Ethnologist 2006, 33(4), 507–518. [Google Scholar] [CrossRef]

- Size and Growth of Administration and Bureaucracy at Yale. Draft report to the Yale Faculty of Arts and Sciences Senate. 2022. Available online: link to the article (accessed 17 September 2025).

- US Open Letter. 15 July 2020. Available online: link to the article (accessed 17 September 2025).

- Wagner, H. N., Jr.; Burns, H. D.; Dannals, R. F.; Wong, D. F.; Langstrom, B.; Duelfer, T.; Frost, J. J.; Ravert, H. T.; Links, J. M.; Rosenbloom, S. B.; Lukas, S. E.; Kramer, A. V.; Kuhar, M. J. Imaging dopamine receptors in the human brain by positron tomography. Science 1983, 221(4617), 1264–1266. [Google Scholar] [CrossRef] [PubMed]

- Yoder, K. K.; Morris, E. D.; Constantinescu, C. C.; Cheng, T. E.; Normandin, M. D.; O’Connor, S. J.; Kareken, D. A. When what you see isn’t what you get: Alcohol cues, alcohol administration, prediction error, and human striatal dopamine. Alcoholism: Clinical and Experimental Research 2009, 33(1), 139–149. [Google Scholar] [CrossRef] [PubMed]

© 2025 Copyright by the authors. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).